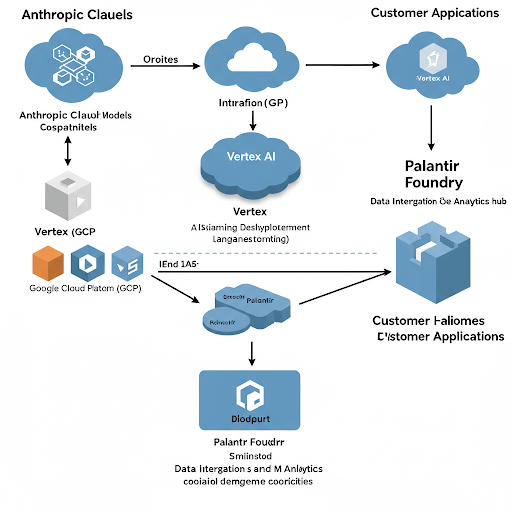

I read this article and asked Google Gemini what a solution architecture involving Anthropic Claude, Google Cloud, and Palantir might look like. There’s definitely some hallucination in the diagrams, but overall the result is quite brilliant.

I’m excited about these developments, but I’m also thinking about the cybersecurity implications. How will an undertaking this large be locked down?

So I asked Gemini how someone might try to compromise this architecture, and got this response:

“Understanding how someone might attempt to compromise this type of architecture involves considering potential vulnerabilities at each layer and in the interactions between them. Here’s a breakdown of potential attack vectors, keeping in mind that robust security measures should be in place to mitigate these risks:

- Anthropic Claude Models:
  - Prompt Injection: Maliciously crafted prompts could trick the Claude models into performing unintended actions, revealing sensitive information, or bypassing security controls. This is a common concern with LLMs.
  - Model Evasion: Attackers might try to craft inputs that exploit weaknesses in the model’s training data or architecture to bypass safety filters or generate harmful content.
  - Data Poisoning (less likely in this context, but a general LLM risk): If the models were continually fine-tuned on external data sources, a malicious actor might attempt to inject biased or harmful data to influence the model’s behavior. In a controlled deployment like this, however, it’s less probable.

- Google Cloud Platform (GCP) and Vertex AI:
  - Cloud Account Compromise: Gaining unauthorized access to the GCP account through compromised credentials (usernames, passwords, API keys) or by exploiting vulnerabilities in GCP services could allow attackers to control resources, access data, and potentially manipulate the deployment of Claude models.
  - IAM (Identity and Access Management) Exploitation: Weak or misconfigured IAM policies could grant excessive permissions to unauthorized users or services, allowing them to interact with Vertex AI and other resources in unintended ways.
  - Vulnerabilities in Vertex AI Services: Although Google Cloud invests heavily in security, undiscovered vulnerabilities in Vertex AI services could be exploited to gain unauthorized access or execute malicious code.
  - Network Security Issues: Misconfigured firewall rules or network segmentation could allow unauthorized access to Vertex AI endpoints or the underlying infrastructure.

- Palantir Foundry:
  - Foundry Account Compromise: Similar to GCP, compromising user accounts or service accounts with access to Foundry could grant attackers the ability to view, modify, or delete data, as well as potentially influence the data pipelines used to prepare data for Claude or analyze its outputs.
  - Data Injection/Manipulation: If attackers gain access to data sources ingested by Foundry or to the data pipelines themselves, they could inject malicious data that could then be fed to Claude, potentially influencing its behavior or the insights derived.
  - Exploiting Foundry Vulnerabilities: As with any complex software, vulnerabilities in the Foundry platform itself could be exploited to gain unauthorized access or execute malicious code within the environment.
  - API Security Weaknesses: If Foundry exposes APIs for integration with other systems, weaknesses in these APIs (e.g., lack of proper authentication or authorization) could be exploited.

- Customer Applications:
  - Application-Level Vulnerabilities: Common web application vulnerabilities (e.g., SQL injection, cross-site scripting, insecure deserialization) in the customer-facing applications that interact with Claude or Foundry could be exploited to gain unauthorized access or manipulate data.
  - API Key Exposure: If API keys used to access Claude or Foundry are embedded insecurely within customer applications, they could be stolen and used by malicious actors.
  - Social Engineering: Attackers might try to trick users of the customer applications into revealing credentials or performing actions that could compromise the overall system.

- Interactions Between Components:
  - API Security: The security of the APIs used for communication between Vertex AI, Foundry, and customer applications is critical. Weak authentication, authorization, or insecure data transfer mechanisms could be exploited.
  - Data Flow Vulnerabilities: Attackers might try to intercept or manipulate data as it flows between these different components.

General Security Best Practices:

It’s crucial to understand that a robust security posture relies on a layered defense approach, including:

- Strong Authentication and Authorization: Using multi-factor authentication, the principle of least privilege, and robust identity management.

- Regular Security Audits and Penetration Testing: Identifying and addressing potential vulnerabilities proactively.

- Data Encryption: Protecting data at rest and in transit.

- Network Segmentation: Isolating different components of the architecture to limit the impact of a breach.

- Security Monitoring and Logging: Detecting and responding to suspicious activity.

- Keeping Software Up-to-Date: Patching known vulnerabilities in all components.

- Secure Development Practices: Building secure applications and integrations.

In summary, “hacking into” this architecture would likely involve targeting weaknesses in one or more of these areas, aiming to gain unauthorized access to data, manipulate the AI models, or disrupt operations. A strong security posture across all components and their interactions is essential to mitigate these risks.”
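To make the IAM point a little more concrete, here is a minimal sketch (my own illustration, not part of Gemini’s answer) that scans a Google Cloud IAM policy for two common misconfigurations: bindings to the public principals `allUsers` or `allAuthenticatedUsers`, and grants of the broad basic roles `roles/owner` and `roles/editor`. It assumes the policy has been exported as JSON, for example with `gcloud projects get-iam-policy PROJECT_ID --format=json`. The function name `audit_policy`, the rule set, and the sample project and account names are hypothetical. Treat it as a starting point for a review, not a substitute for Google Cloud’s own IAM analysis tooling.

```python
"""Hypothetical IAM policy audit: flag over-permissive bindings in a GCP policy."""
import json
from typing import Any

# Hypothetical audit rules for illustration; not an official Google Cloud check.
# Basic roles that grant broad read/write access across a whole project.
BROAD_ROLES = {"roles/owner", "roles/editor"}
# Special principals meaning "anyone on the internet" / "any Google account".
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def audit_policy(policy: dict[str, Any]) -> list[str]:
    """Return human-readable findings for risky role bindings.

    Conditional bindings are treated like unconditional ones (a simplification).
    """
    findings: list[str] = []
    for binding in policy.get("bindings", []):
        role = binding.get("role", "")
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                # Any role granted to a public principal is worth a look.
                findings.append(f"{role} granted to public principal {member}")
            elif role in BROAD_ROLES:
                # Basic roles usually violate least privilege; prefer scoped roles.
                findings.append(f"basic role {role} granted to {member}")
    return findings


if __name__ == "__main__":
    # Sample policy in the shape returned by `gcloud ... get-iam-policy --format=json`.
    sample = json.loads("""
    {
      "bindings": [
        {"role": "roles/aiplatform.user",
         "members": ["serviceAccount:app@example-project.iam.gserviceaccount.com"]},
        {"role": "roles/editor", "members": ["user:dev@example.com"]},
        {"role": "roles/storage.objectViewer", "members": ["allUsers"]}
      ]
    }
    """)
    for finding in audit_policy(sample):
        print(finding)
```

Running it against the sample policy prints two findings, one for the `roles/editor` grant and one for the public `allUsers` binding, while the narrowly scoped Vertex AI User role (`roles/aiplatform.user`) on the service account passes.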

Sources:

1. Palantir to Deliver Anthropic AI Application to Government

2. Google Gemini